The last two years have seen the global retail industry’s most extraordinary digital transformation. The pandemic pushed more shopping and delivery online, causing a dramatic shift in customer behavior and preferences.

As consumers’ reliance on digital devices continues to grow, mcommerce (mobile commerce, i.e., shopping on a mobile device) is poised to burst into the mainstream.

Insider Intelligence predicts that mobile will inch closer to becoming consumers’ preferred channel for online shopping within the next five years. It’s no wonder mobile consumer spend is expected to reach $728 billion globally by 2025.

Below, we look at the road ahead for mcommerce growth, highlighting the key stats, trends, and strategies to help mobile retail apps stay ahead of the curve.

Trend #1: Consumers Are Spending More on Mobile

Smartphones have historically suffered poor conversion rates because of bad user experience, customer frustration, and the difficulty of checking out on a small screen. Even so, they have now become the driving force behind mcommerce growth.

The rise in app usage and spending power among the next generations of mobile consumers will be major contributors to growth and sales volumes.

And the data certainly reflects this:

- In the US, mcommerce is growing at an average annual growth rate of 25%.

- Mobile retail sales hit $359.32 billion in 2021, an increase of 61.4% from 2019.

- Sales are tipped to reach $436.75 billion in 2022—a 21.5% increase from the previous year.

- By 2025, mcommerce sales are predicted to reach a staggering $728.28 billion.

- Each year, mobile accounts for a greater portion of overall ecommerce sales. In 2019, it accounted for 36.9% of total ecommerce sales, growing to 38.5% in 2021 and predicted to hit 44.2% by 2025. In the last quarter of 2021, smartphones generated 56% of online shopping orders in the US.

- On a global level, mcommerce makes up 72.9% of all ecommerce sales. That’s a 39% increase from just five years ago. In other words, almost three out of every four dollars spent online today are spent through a mobile device.

- 79% of all smartphone users have purchased using their mobile devices in the last six months and 75% say they purchase from their mobile device because it saves them time.
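The sales figures in the list above can be cross-checked with a little arithmetic. The following is a minimal Python sketch, not part of the original research: the function names are illustrative, and the 2019 base and the 2021 to 2025 compound rate are derived from the quoted numbers rather than reported by any source.

```python
def pct_change(old: float, new: float) -> float:
    """Percentage change from old to new."""
    return (new - old) / old * 100


def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values, in percent."""
    return ((end / start) ** (1 / years) - 1) * 100


# Mobile retail sales quoted in the list above, in billions of US dollars.
sales_2021 = 359.32
sales_2022 = 436.75
sales_2025 = 728.28

# Year-over-year growth from 2021 to the 2022 forecast.
growth_2022 = pct_change(sales_2021, sales_2022)

# 2019 base implied by the quoted 61.4% increase (derived, not a reported figure).
implied_2019 = sales_2021 / 1.614

# Average yearly growth needed to get from the 2021 figure to the 2025 forecast.
implied_cagr = cagr(sales_2021, sales_2025, years=4)

print(f"2021 to 2022 growth: {growth_2022:.1f}%")
print(f"Implied 2019 sales: ${implied_2019:.2f}B")
print(f"Implied 2021 to 2025 CAGR: {implied_cagr:.1f}%")
```

The 2021 to 2022 growth comes out at about 21.5%, which matches the quoted forecast. The 2025 forecast implies average yearly growth of roughly 19% from 2021 onward, so the projections assume growth that is still strong but slower than in the pandemic years.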

Trend #2: Shopping Apps Are Growing

According to the latest State of Mobile report from App Annie, mobile shopping habits created by the pandemic have not only solidified but grown.

Consumers globally spent 18% more time in shopping apps in 2021 compared to the previous year, with fast fashion, social shopping, and big brands leading the way.

Shopping is the fastest-growing mobile app category, with 54% year-on-year growth. According to Adjust, the average install-to-register rate of a mobile shopping app increased from 29.8% in 2019 to 32.8% in 2020. The install-to-purchase rate was 14.7%, up from 10.5% in 2019.

The data also reveals that mobile apps perform better than mobile websites:

- Apps convert at least three times better than mobile websites.

- The average order value from a mobile app is $10 more than from a mobile website.

- Mobile app users make twice as many purchases as mobile website users.

- Mobile apps have a 30% lower cart abandonment rate than mobile web.

- 51% of mobile shoppers in the US make their purchases via apps.

- Brands can see the opportunity this brings, as 46% of retailers plan to invest more time in building custom mobile apps in 2022.

Trend #3: Consumer Priorities Have Changed

Five years ago, price competitiveness and product variety were priorities for customers shopping online. Today, customer experience is the driving force behind retail brand loyalty—even beyond price.

The costs of a poor experience are too high to ignore:

- As many as 90% of shoppers say their experiences with mobile commerce could be improved, with over 60% less likely to purchase from a brand in the future if they have a negative mobile experience.

- According to Google, 60% of buyers say transaction speed contributes to their decision to purchase or not.

Disruptions, trends, and innovations cause these paradigm shifts in consumer behavior that can be difficult to predict. But one thing is for sure: customers continue to look for compelling, personalized experiences that meet them where they are.

- 80% of people are more likely to do business with a company if it offers personalized experiences, and 90% find personalization appealing.

- 66% of consumers expect brands to understand their individual needs.

- 61% of mobile consumers are more likely to buy from mobile sites that customize information to location and preferences.

- 67% of consumers think it’s important for brands to automatically adjust their content to reflect the current context.

Of course, these changes in consumer preferences place additional pressure on retailers to provide a seamless end-to-end experience that is personalized across channels. Those that can achieve this will put themselves ahead of the competition.

In-App Purchase Strategies

Why is mobile retail growing at such a fast pace? Well, it’s pretty obvious—people are spending more screen time on their mobile devices. But why?

Part of this is driven by technology—smartphones are getting faster, screens are getting bigger, and the overall mobile experience is improving.

As a result, consumers are becoming increasingly reliant on their mobile devices. Just think: mobile users today can reach into their pockets and buy almost anything imaginable in just a few taps. So, a lot of this growth can be attributed to the convenience of mobile shopping. Three out of four consumers say they carry out purchases on their mobile devices because it saves time.

So, what can brands do to leverage the growth that’s happening in the mobile retail space?

Strategy #1: Retain Users by Optimizing Onboarding

An optimized onboarding experience is crucial for gaining shoppers’ trust and leading them down the path to making a purchase in your app.

Begin with an appealing welcome screen

Your welcome screen should convey your value proposition as concisely as possible. Try to strike a balance between communicating your unique selling point and creating anticipation so that users want to explore your app.

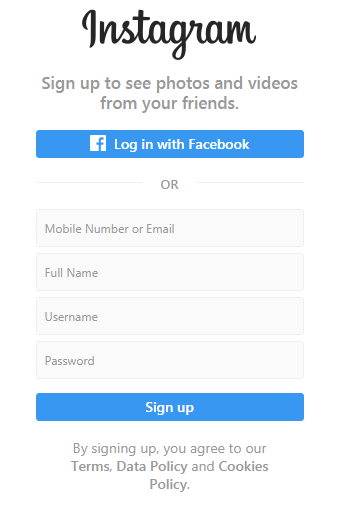

Keep sign-up simple

The sign-up stage is where most apps see a significant drop-off, losing up to 56% of shoppers. However, a well-executed sign-up flow can make a massive difference in activation and retention rates. Keep required fields to a minimum and think about what information could be asked for at a later stage instead.

Provide intuitive tooltips

Onboard users progressively rather than all at once. As they navigate your app, provide modals, tips, hints, and nudges that teach them each feature in context, at the moment they need it.

Read more: App Onboarding: Creating Engaged Users From the Start

Strategy #2: Build Loyalty Beyond Rewards

Customer loyalty is no longer about a discount-heavy transactional model. Instead, customer experience is now the number one factor driving ecommerce brand loyalty.

So, how can you elevate loyalty beyond just competing on price?

Know your customers

Reexamine your customer journey map to understand when your customers are asking for help. Then, be part of their journey from the very beginning rather than trying to fight for the sale near the end. Retailers that can earn customer trust and insert themselves into the decision-making process early on are better positioned to win not just sales but lasting customer loyalty.

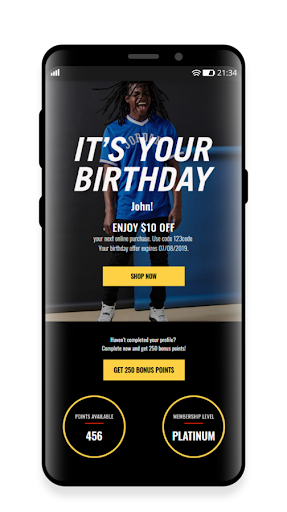

Data-driven personalization

A study by Accenture found that 91% of customers are more likely to become repeat buyers after a customized experience with a brand.

And it’s easy to see why—content, offerings, and recommendations that are relevant, timely, and accurately based on a user’s behavior are much more likely to be perceived as helpful.

Leverage your users’ data to give customers exactly what they want, when and where they want it.

Deliver relevant rewards

Of course, there is still a time and place for rewards in driving brand loyalty. However, to maximize the customer experience, cater your offers to the unique user.

Personalized incentives build stronger emotional connections than generic promotions and inspire users to continue supporting your brand.

Read more: Customer Loyalty—Strategies to Retain and Reward Loyal Customers

Strategy #3: Reduce Cart Abandonment

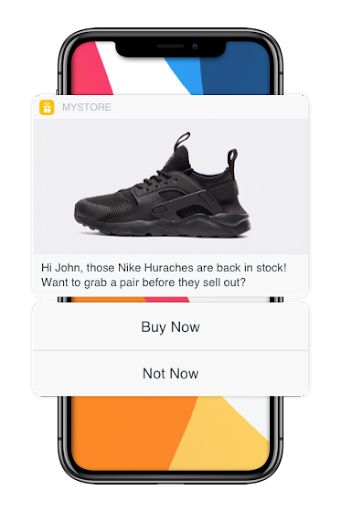

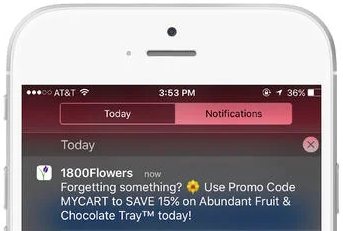

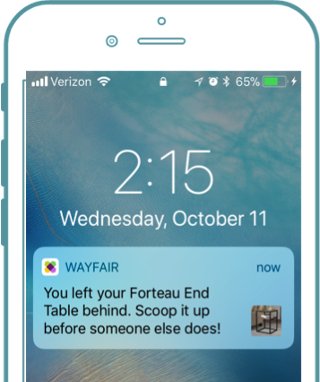

More than 69% of all transactions are abandoned before completion, making cart abandonment one of the biggest challenges facing retail businesses. The good news is that approximately 60% of these sales are recoverable through meaningful push notifications.

Deliver a promotional offer

A push notification that includes an incentive such as a discount or promo code can be powerful in enticing an unsure buyer to complete a purchase.

Create a sense of urgency

A limited-time price incentive or notification about stock running low on a particular item can encourage a user to make a move.

Get personal

Instead of a basic reminder of a product in a cart, call out the specifics. Add the name of the customer and the product they were interested in purchasing. Be as specific as possible about where the user was in the checkout process.
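To make the three tactics above concrete, here is a minimal Python sketch of how a cart-recovery message could be assembled from a promotional offer, a low-stock urgency cue, and personal details. Everything in it is hypothetical: the `AbandonedCart` fields, the checkout step names, the promo code, and the low-stock threshold of five units are assumptions for illustration. It only builds the message text and does not call any real push notification service.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical wording for each checkout step; adjust to your own funnel.
STEP_HINTS = {
    "shipping": "Just add a shipping address to continue.",
    "payment": "You were one step from finishing checkout.",
}

LOW_STOCK_THRESHOLD = 5  # assumed cutoff for showing an urgency cue


@dataclass
class AbandonedCart:
    customer_name: str
    product_name: str
    checkout_step: str  # "cart", "shipping", or "payment" in this sketch
    promo_code: Optional[str] = None
    units_left: Optional[int] = None


def build_reminder(cart: AbandonedCart) -> str:
    """Assemble a personalized reminder from whatever details are known."""
    # Get personal: name the customer and the product.
    parts = [f"Hi {cart.customer_name}, you left {cart.product_name} in your cart."]

    # Be specific about where the user stopped in the checkout process.
    hint = STEP_HINTS.get(cart.checkout_step)
    if hint:
        parts.append(hint)

    # Create a sense of urgency only when stock really is low.
    if cart.units_left is not None and cart.units_left <= LOW_STOCK_THRESHOLD:
        parts.append(f"Only {cart.units_left} left in stock.")

    # Deliver a promotional offer if one is available.
    if cart.promo_code:
        parts.append(f"Use code {cart.promo_code} to save on your order.")

    return " ".join(parts)


message = build_reminder(
    AbandonedCart(
        customer_name="Sam",
        product_name="trail running shoes",
        checkout_step="payment",
        promo_code="COMEBACK10",
        units_left=3,
    )
)
print(message)
```

Keeping each tactic as an optional part means the same function still produces a sensible, shorter reminder when no promo code or stock data is available.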

Read more: The Best Push Notifications to Send to Reduce Cart Abandonment